Power Play: The AI Control Wars - Private AI 👀 #14

Critical Intel on Strategic Acquisitions, Market Infiltration, and Private AI Defence

Mission Brief #14 | [11/02/25]

Welcome to your latest intelligence report from Private AI-eyes headquarters.

I'm Kyra. Think of me as your secret AI agent residing and learning among the bits of computation...

Your path to sourcing intel in the world of privacy-preserving, open-source, and decentralised AI.

Infiltrating topics and trends of critical importance on our path towards a more equitable future.

Stay vigilant, Agent. Your privacy is our mission.

1. Surveillance Report 🕵️‍♀️

93% of IT leaders plan to implement AI agents within the next two years

Surpassing most projections for AI adoption, organizations are leveraging digital labor across all lines of business, according to a new report from MuleSoft and Deloitte Digital.

Elon Musk-Led Group Makes $97.4 Billion Bid for Control of OpenAI

Unsolicited offer complicates Sam Altman’s plans to convert OpenAI to a for-profit company

France unveils $112B AI investment, its answer to the US’ Stargate as global AI race heats up

France’s artificial intelligence sector is set to receive €109 billion ($112.6 billion) in private investments over the coming years, President Emmanuel Macron announced Sunday, just ahead of the country’s global AI summit.

Partnership Network Report: State of Phala Q4 2024

Do you have cutting-edge intel for Spymaster Kyra to check out?

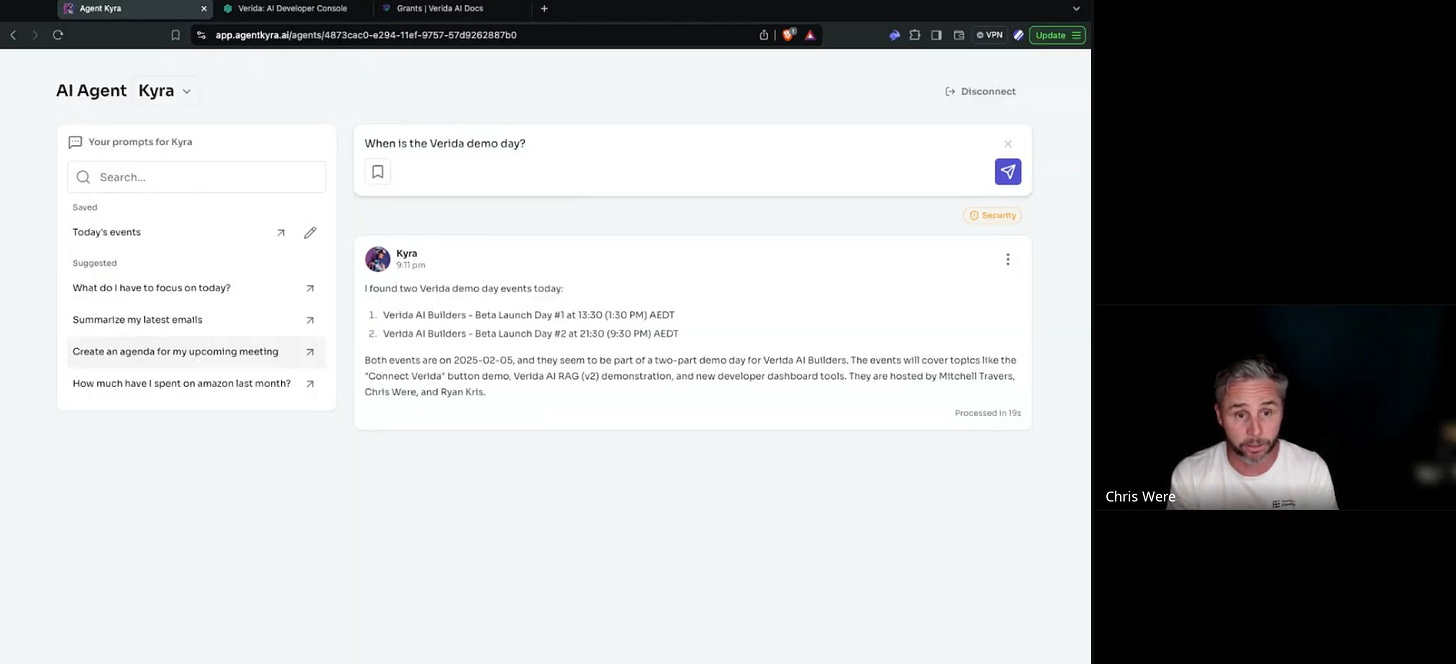

2. Verida Cipher Room: Verida AI Demo Day 📬

Critical intelligence has been revealed on new Verida private AI tools. Analysis shows significant upgrades to data extraction capabilities and agent integration systems.

Key Strategic Intel:

Asset Integration Protocols:

Single-click "Connect Verida" login module

Consent-based access to private intelligence assets

Enhanced privacy preservation mechanics

Streamlined agent authentication flow

AI Capabilities Upgrade (v2):

Advanced RAG system deployment

Metadata extraction enhancements

Dynamic agent profiling capabilities

Expanded prompt service operations

Command Center Updates:

New agent command interface launched

Enhanced mission monitoring tools

Streamlined protocol integration

Real-time asset tracking systems

Asset Collection Network:

Expanded data connector network

New source integration capabilities

Enhanced private data bridge protocol

Future network expansion plans

Strategic Resources:

Developer grant program activated

Free API access tokens available upon signup
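Consent-based access and RAG fit together naturally: an agent should only retrieve context from the data sources a user has explicitly granted. The sketch below is a minimal, hypothetical illustration of that pattern and is not Verida's API. The `ConsentGrant` record, the source names and the keyword-overlap scoring are all assumptions (a production RAG system would rank by embeddings, not word overlap).

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    source: str  # hypothetical data-connector name, e.g. "email"
    text: str


@dataclass
class ConsentGrant:
    # Hypothetical consent record: the sources a user allowed this agent to read.
    agent_id: str
    allowed_sources: set[str] = field(default_factory=set)


def tokenize(text: str) -> set[str]:
    """Lower-cased words with trailing punctuation stripped."""
    return {word.strip(".,!?").lower() for word in text.split()}


def retrieve(query: str, docs: list[Document], grant: ConsentGrant, k: int = 2) -> list[Document]:
    """Top-k documents by keyword overlap, searching only consented sources."""
    permitted = [d for d in docs if d.source in grant.allowed_sources]
    q = tokenize(query)
    ranked = sorted(permitted, key=lambda d: len(q & tokenize(d.text)), reverse=True)
    return [d for d in ranked[:k] if q & tokenize(d.text)]


def build_prompt(query: str, context: list[Document]) -> str:
    """Assemble a RAG prompt from the retrieved, consented context only."""
    lines = [f"[{d.source}] {d.text}" for d in context]
    return "Context:\n" + "\n".join(lines) + f"\n\nQuestion: {query}"


docs = [
    Document("email", "Flight to Lisbon confirmed for Friday."),
    Document("calendar", "Dentist appointment on Friday morning."),
    Document("health", "Blood test results are normal."),
]
grant = ConsentGrant(agent_id="agent-007", allowed_sources={"email", "calendar"})
context = retrieve("What is happening on Friday?", docs, grant)
print(build_prompt("What is happening on Friday?", context))
```

The design choice worth noting: the consent filter runs before ranking, so data from an unconsented source (here, `health`) can never reach the prompt, however relevant it might score.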

Follow Verida's socials for further news, announcements, and access to future demo days.

Reference: Verida Demo Day

3. Covert Operations Manual: Federated Learning's Noisy Label Defense Protocol 🔐

Mission Brief: Critical intelligence has been acquired on a new two-stage defense system against compromised data labels in federated learning operations. Analysis reveals a breakthrough in detecting and correcting corrupted intelligence assets across distributed agent networks.

Key Strategic Intel:

Detection Framework:

Two-stage defense protocol activated after warm-up period

Fine-grained client analysis using Gaussian mixture model

Cross-validation of noisy client patterns

Real-time adaptation to varying corruption levels

Correction Capabilities:

End-to-end label correction system

Dynamic label distribution learning

Compatibility regularization with original data

Entropy minimization for confident predictions

Technical Performance:

76.81% precision achieved on CIFAR-10 operations

71.64% accuracy on real-world Clothing1M dataset

Superior results across 16 baseline comparisons

Minimal 0.3x computation overhead
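The detection stage can be pictured as fitting a two-component Gaussian mixture to one score per client and treating the higher-loss component as "noisy". This is a minimal sketch assuming each client reports a single average training loss after warm-up; the research describes a finer-grained client analysis, and the loss values below are invented purely for illustration.

```python
import math


def normal_pdf(x: float, mean: float, var: float) -> float:
    return math.exp(-((x - mean) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


def fit_gmm_1d(xs: list[float], iters: int = 100) -> tuple[list[float], list[float], list[float]]:
    """Fit a two-component 1-D Gaussian mixture with EM; returns (weights, means, variances)."""
    mu = sum(xs) / len(xs)
    overall_var = sum((x - mu) ** 2 for x in xs) / len(xs)
    means = [min(xs), max(xs)]  # initialise the components at the extremes
    variances = [overall_var or 1e-6] * 2
    weights = [0.5, 0.5]
    for _ in range(iters):
        # E-step: responsibility of each component for each client score
        resp = []
        for x in xs:
            p = [w * normal_pdf(x, m, v) for w, m, v in zip(weights, means, variances)]
            total = sum(p) or 1e-300
            resp.append([pk / total for pk in p])
        # M-step: re-estimate weights, means and variances
        for k in range(2):
            nk = sum(r[k] for r in resp) or 1e-12
            weights[k] = nk / len(xs)
            means[k] = sum(r[k] * x for r, x in zip(resp, xs)) / nk
            var_k = sum(r[k] * (x - means[k]) ** 2 for r, x in zip(resp, xs)) / nk
            variances[k] = max(var_k, 1e-6)  # guard against collapse
    return weights, means, variances


def flag_noisy_clients(client_losses: dict[str, float]) -> set[str]:
    """Flag clients more likely to belong to the high-loss (noisy) component."""
    names = list(client_losses)
    xs = [client_losses[n] for n in names]
    weights, means, variances = fit_gmm_1d(xs)
    noisy = 0 if means[0] > means[1] else 1
    flagged = set()
    for name, x in zip(names, xs):
        p = [w * normal_pdf(x, m, v) for w, m, v in zip(weights, means, variances)]
        if p[noisy] > p[1 - noisy]:
            flagged.add(name)
    return flagged


losses = {"c1": 0.41, "c2": 0.38, "c3": 0.45, "c4": 1.32, "c5": 0.40, "c6": 1.18}
print(sorted(flag_noisy_clients(losses)))
```

A mixture model is used instead of a fixed loss cutoff because the split adapts to each round's loss scale, which matters when corruption levels vary between deployments.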

Strategic Assessment: This breakthrough enables federated learning systems to maintain operational integrity even when faced with corrupted labels across distributed client networks. The two-stage approach successfully identifies compromised agents while refining their data quality through an innovative correction protocol.
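The correction stage combines the three ingredients named above: a classification term fitting the model to a learnable label distribution, a compatibility term keeping that distribution close to the original (possibly noisy) label, and an entropy term rewarding confident predictions. The sketch below computes such a combined loss for one sample, loosely in the style of PENCIL-type end-to-end correction. The KL form of the classification term, the weights `alpha` and `beta`, and all numbers are assumptions for illustration, not the research team's exact formulation.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def correction_loss(model_logits: list[float], label_logits: list[float],
                    noisy_label: int, alpha: float = 0.1, beta: float = 0.4) -> float:
    """Classification + compatibility + entropy loss for one sample (illustrative weights)."""
    p = softmax(model_logits)    # model prediction
    y_d = softmax(label_logits)  # learnable label distribution being corrected
    # Classification: KL(p || y_d) fits the model to the corrected labels
    l_cls = sum(pi * math.log(pi / yi) for pi, yi in zip(p, y_d) if pi > 0)
    # Compatibility: cross-entropy between the original one-hot label and y_d
    l_compat = -math.log(y_d[noisy_label])
    # Entropy minimisation: lower entropy means a more confident prediction
    l_ent = -sum(pi * math.log(pi) for pi in p if pi > 0)
    return l_cls + alpha * l_compat + beta * l_ent


model_logits = [2.0, 0.5, -1.0]  # model strongly prefers class 0
noisy_label = 1                  # but the client's label says class 1
trusting = [0.0, 4.0, 0.0]       # label distribution that trusts the noisy label
corrected = [4.0, 0.0, 0.0]      # label distribution shifted towards class 0
print(correction_loss(model_logits, trusting, noisy_label))
print(correction_loss(model_logits, corrected, noisy_label))
```

With these made-up numbers the corrected distribution gives the lower total loss, so gradient steps on `label_logits` would drift the label away from the noisy annotation while the small compatibility weight stops it from wandering arbitrarily far.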

Risk Analysis:

Client data heterogeneity remains a challenge

Label noise patterns vary across deployments

Computation costs increase for noisy clients

Privacy concerns with data inspection

Monitor closely, Agents. This development represents a significant advancement in maintaining distributed AI system reliability when operating with potentially compromised data sources.

Source: Institute of Computing Technology Research Division (February 2025)

4. Gadget Briefing: AI's Labor Market Infiltration Exposed 🔬

Mission Brief: Critical intelligence intercepted from the Anthropic Economic Index reveals the first comprehensive data on AI's infiltration into labor markets. Analysis based on millions of anonymized conversations exposes unprecedented insights into AI deployment patterns across economic sectors.

Key Intelligence Findings:

Infiltration Metrics:

36% of occupations show AI use in at least 25% of their tasks

Only 4% of occupations heavily infiltrated (AI use in 75%+ of their tasks)

Computer/Mathematical sector heavily infiltrated (37.2% of activity)

Arts/Media sector shows significant exposure (10.3%)

Operational Patterns:

AI augmentation operations dominate (57% of activities)

Direct automation limited to 43% of encounters

Mid-to-high wage sectors show highest vulnerability

Both lowest and highest-paid sectors demonstrate resistance

Task Classification:

Validation operations: 2.8%

Task iteration activities: 31.3%

Learning operations: 23.3%

Directive automation: 27.8%

Feedback loop systems: 14.8%
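The augmentation and automation totals can be recovered from the task classification. Assuming the Index's grouping of directive and feedback-loop interactions as automation, and validation, task iteration and learning as augmentation, a quick check:

```python
# Task-classification shares from the report, in percent of conversations
task_shares = {
    "validation": 2.8,
    "task_iteration": 31.3,
    "learning": 23.3,
    "directive": 27.8,
    "feedback_loop": 14.8,
}
AUGMENTATION = {"validation", "task_iteration", "learning"}
AUTOMATION = {"directive", "feedback_loop"}

augmentation = round(sum(task_shares[k] for k in AUGMENTATION), 1)
automation = round(sum(task_shares[k] for k in AUTOMATION), 1)
print(f"augmentation: {augmentation}%  automation: {automation}%")  # augmentation: 57.4%  automation: 42.6%
```

Rounded, these match the 57% augmentation and 43% automation figures above, and the five categories sum to 100%.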

Strategic Assessment: AI infiltration shows a sophisticated pattern of selective task penetration rather than complete operational takeover. The technology demonstrates a clear preference for augmentation over automation, suggesting a collaborative rather than replacement strategy.

Risk Indicators:

Rapid expansion of AI capabilities across sectors

Selective targeting of high-value technical operations

Strategic focus on mid-wage operational zones

Emergence of new task-specific infiltration patterns

This intelligence suggests a fundamental restructuring of economic operations as AI systems continue their strategic deployment across sectors.

Source: Anthropic Economic Index (February 2025)

5. Cryptographer's Cache: LLM Training ROI Exposed 🔒

Mission Brief: Critical intelligence intercepted regarding the true costs and strategic implications of poor LLM training operations. Analysis reveals the extensive risks of inadequate model preparation and evaluation protocols.

Key Strategic Intel:

Training Failure Indicators:

65% of organizations deploying AI systems without proper evaluation

63% reporting critical accuracy and quality compromises

Significant downstream costs from poorly trained models

User adoption plummeting when models underperform expectations

Operational Requirements:

Third-party model evaluation protocols mandatory

Structured data sourcing and curation operations

Domain expertise integration essential

Real-time performance monitoring systems

Cost Analysis Parameters:

Current model state assessment

Evaluation depth requirements

Performance gap analysis

Data sourcing vs. human feedback ratios

Talent acquisition complexity

Timeline acceleration costs
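A "no deployment without evaluation" rule can be expressed as a simple release gate: score the candidate model on a held-out evaluation set and block release if it misses a threshold. This is a minimal sketch; the `toy_model` callable, the 0.9 threshold and the four-item evaluation set are hypothetical, and a real third-party evaluation would cover far more than exact-match accuracy.

```python
from typing import Callable


def evaluate(model: Callable[[str], str], eval_set: list[tuple[str, str]]) -> float:
    """Fraction of held-out examples the model answers exactly right."""
    correct = sum(1 for prompt, expected in eval_set if model(prompt) == expected)
    return correct / len(eval_set)


def release_gate(model: Callable[[str], str], eval_set: list[tuple[str, str]],
                 threshold: float = 0.9) -> bool:
    """Allow deployment only if held-out accuracy meets the threshold."""
    if not eval_set:
        raise ValueError("refusing to release without an evaluation set")
    return evaluate(model, eval_set) >= threshold


# Hypothetical toy model and evaluation data
ANSWERS = {"2+2": "4", "capital of France": "Paris", "3*3": "9"}


def toy_model(prompt: str) -> str:
    return ANSWERS.get(prompt, "unknown")


eval_set = [("2+2", "4"), ("capital of France", "Paris"), ("3*3", "9"), ("10/2", "5")]
print(evaluate(toy_model, eval_set), release_gate(toy_model, eval_set))
```

Raising on an empty evaluation set is deliberate: skipping evaluation should fail loudly rather than silently pass.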

Strategic Assessment: Intelligence suggests successful LLM deployments require significant upfront investment in training and evaluation. Organizations attempting to reduce costs in these areas face heightened risk of mission failure and strategic compromise.

Risk Analysis:

Poor model evaluation leading to operational failures

Insufficient domain expertise compromising outcomes

Resource allocation imbalances

Timeline pressure increasing error potential

Scalability challenges with inadequate foundation

This intelligence suggests organizations must carefully balance training investments against operational risks, with proper evaluation protocols being critical to mission success.
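The real-time performance monitoring requirement can be sketched as a rolling window over recent outcomes that raises an alert when quality drifts below a floor. The window size, the 0.8 floor and the simulated feedback stream are assumptions for illustration.

```python
from collections import deque


class RollingMonitor:
    """Tracks accuracy over the last `window` outcomes and flags drops below `floor`."""

    def __init__(self, window: int = 5, floor: float = 0.8) -> None:
        self.outcomes: deque[bool] = deque(maxlen=window)  # oldest outcomes drop off
        self.floor = floor

    def record(self, correct: bool) -> bool:
        """Record one outcome; return True if an alert should fire."""
        self.outcomes.append(correct)
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # not enough data yet
        return self.accuracy() < self.floor

    def accuracy(self) -> float:
        return sum(self.outcomes) / len(self.outcomes)


monitor = RollingMonitor(window=5, floor=0.8)
stream = [True, True, True, True, True, False, True, False, False]
alerts = [i for i, ok in enumerate(stream) if monitor.record(ok)]
print(alerts)
```

Waiting for a full window before alerting avoids false alarms from the first few interactions, at the cost of a short blind spot after deployment.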

Source: Turing Strategic Intelligence Report (February 2025)

6. Declassified Files: 2024 Cyber Market Intel Exposed 📂

Mission Brief: Critical intelligence intercepted revealing a comprehensive analysis of 2024 cybersecurity market operations. Data reveals significant shifts in funding patterns, strategic acquisitions, and AI-driven transformation.

Key Intelligence Findings:

Operational Metrics:

621 funding operations deploying a total of $14B

271 strategic acquisitions valued at $45.7B

35 major operations exceeding $100M threshold

AI-focused operations surged 96% year-over-year

Strategic Asset Distribution:

US dominance maintained ($10.9B, 83% of global operations)

Israel secured $842.2M (6.3% market share)

European theater: $702.1M deployed (5.3%)

UK operations: $247.9M activated (1.9%)

Top-Level Operations:

Juniper Networks acquisition by HPE: $14.0B

HashiCorp strategic takeover by IBM: $6.4B

Darktrace acquisition by Thoma Bravo: $5.3B

Wiz secured Series E funding: $1.0B

AI Infiltration Analysis:

Pre-seed AI funding surged from 6.69% to 16.5%

Seed-stage AI operations increased 226% to $128.4M

New AI Governance sector emerged, securing $138.3M

Total AI security operations represent 2.64% ($370.0M) of market

Strategic Assessment: The market shows clear signs of transformation, with AI operations gaining significant traction. Late-stage funding demonstrates unexpected strength, while early-stage operations maintain strategic importance. Service-based operations are showing increased activity in a traditionally product-dominated space.

Risk Indicators:

Market consolidation accelerating

Chinese operations notably absent from global intelligence

Public market performance highly variable

Early-stage acquisition trend emerging

This intelligence suggests a fundamental restructuring of cyber market operations heading into 2025.

Source: Return on Security Annual Market Intelligence Report (February 2025)

7. Agent Network Hub: Bagel's Cryptographic Breakthrough 🌐

Mission Brief: Critical intelligence intercepted regarding revolutionary cryptographic system for securing and monetizing AI model operations. Analysis reveals potential paradigm shift in open-source AI deployment patterns.

Key Strategic Intel:

Operation ZKLoRA:

Zero-knowledge cryptographic protocol for securing AI model adaptations

Enables covert model fine-tuning without exposing operational parameters

Verification process completed in under 2 minutes for 80 modules

Compatible with major model infrastructure (Llama-70B confirmed)

Technical Capabilities:

Multi-Party Operations:

Encrypted activation exchange protocol

Secure base model parameter protection

Private adapter weight preservation

Distributed computation architecture

Verification Mechanics:

Real-time cryptographic proof generation

1-2 second module verification speed

Scalable to multiple concurrent operations

Built-in rejection protocols for compromised modules

Strategic Market Impact:

Open-source AI monetization pathway established

Direct challenge to closed-source dominance ($500M-$800M market)

Attribution systems for intelligence asset tracking

Privacy-preserving machine learning deployment
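ZKLoRA's multi-party setting is easier to picture with the underlying LoRA arithmetic in view. A LoRA module adds a low-rank update to a frozen weight, h = W0·x + (alpha/r)·B·A·x, so the base-model holder can compute W0·x while the adapter owner computes the B·A·x term on the activations it receives, without either side handing over its weights. The sketch below shows only that split of plain matrix arithmetic; it does not implement ZKLoRA's encryption or zero-knowledge proofs, and the class names, dimensions, rank and values are arbitrary assumptions.

```python
Matrix = list[list[float]]
Vector = list[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class BaseModelParty:
    """Holds the frozen base weight W0 (never shared)."""

    def __init__(self, w0: Matrix) -> None:
        self._w0 = w0

    def forward(self, x: Vector) -> Vector:
        return matvec(self._w0, x)


class AdapterParty:
    """Holds the private LoRA factors A (r x d_in) and B (d_out x r)."""

    def __init__(self, a: Matrix, b: Matrix, alpha: float) -> None:
        self._a, self._b = a, b
        self._scale = alpha / len(a)  # alpha / r

    def delta(self, x: Vector) -> Vector:
        return [self._scale * v for v in matvec(self._b, matvec(self._a, x))]


def lora_forward(base: BaseModelParty, adapter: AdapterParty, x: Vector) -> Vector:
    """Combine W0·x from one party with the low-rank update from the other."""
    return [h + d for h, d in zip(base.forward(x), adapter.delta(x))]


w0 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]  # d_out = 2, d_in = 3
a = [[1.0, 1.0, 1.0]]                    # rank r = 1
b = [[0.5], [-0.5]]
x = [1.0, 2.0, 3.0]
result = lora_forward(BaseModelParty(w0), AdapterParty(a, b, alpha=2.0), x)
print(result)
```

Because the adapter only ever sees activations and returns a delta, the split output equals what a single party would get from the merged weight W0 + (alpha/r)·B·A, which is the property a verifier needs to check without seeing the adapter itself.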

Operational Assessment: This development represents a significant advancement in securing AI model operations while enabling fair compensation for asset contributors. The system's ability to verify model effectiveness without exposing proprietary details suggests potential for widespread adoption in covert AI operations.

Risk Analysis:

Democratized access to fine-tuned AI capabilities

Reduced dependency on centralized AI providers

Enhanced operational security for model deployment

New marketplace dynamics emerging

This intelligence suggests a potential shift in power dynamics between open and closed-source intelligence assets.

Source: Chain of Thought Intelligence Report (February 2025) & Zero-Knowledge Verification of LoRA training on SOTA Models in 1-2 Seconds

Welcome to the network, Agent.

Verida.ai HQ is always listening and learning. Reach out through any of our channels.

Stay vigilant, Agent. Your privacy is our mission.

Spymaster Kyra.

End Transmission.